Adaptive Whole-body Robotic Tool-use Learning on Low-rigidity Plastic-made Humanoids Using Vision and Tactile Sensors

ICRA 2024

- Kento Kawaharazuka

- Kei Okada

- Masayuki Inaba

JSK Robotics Laboratory, The University of Tokyo, Japan

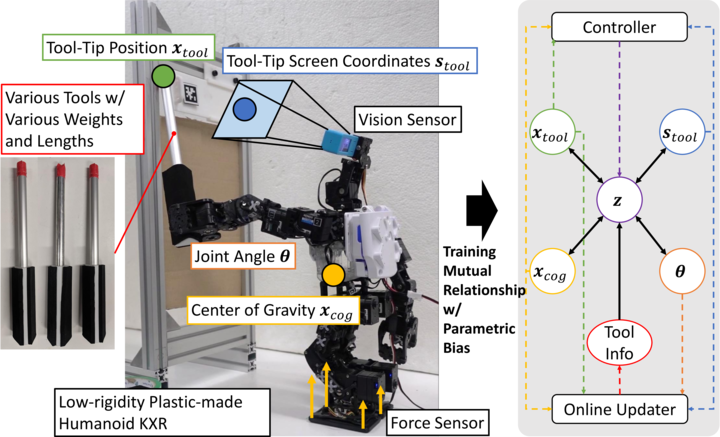

Various robots have been developed so far; however, modeling the low-rigidity bodies of some robots remains challenging. In particular, grasping an object changes how the body deflects during tool-use, which significantly shifts the tool-tip position and the body's center of gravity. Moreover, this deflection varies with the weight and length of the tool, making these models exceptionally complex. Yet no existing control or learning method takes all of these effects into account. In this study, we propose a method for constructing a neural network that describes the mutual relationship among joint angles, visual information, and tactile information from the feet. We train this network using data from the actual robot and use it for tool-tip control. Additionally, we employ Parametric Bias (PB) to capture changes in this mutual relationship caused by variations in tool weight and length, which lets us infer the characteristics of the grasped tool from the current sensor information. We apply this approach to whole-body tool-use on KXR, a low-rigidity plastic-made humanoid robot, to validate its effectiveness.

Whole-body Robotic Tool-use Learning for Low-Rigidity Robots

The concept of this study: learning the mutual relationship among joint angles, center of gravity (COG), tool-tip position, and tool-tip screen coordinates for adaptive whole-body tool-use by low-rigidity robots, accounting for changes in tool weight and length.
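To make the idea of Parametric Bias concrete, here is a minimal NumPy sketch; it is not the authors' implementation. It assumes a small one-hidden-layer network that maps joint angles plus a per-tool PB vector to COG (2D), tool-tip position (3D), and tool-tip screen coordinates (2D), and it learns the network weights and one PB vector per tool state jointly by full-batch gradient descent. The `toy_robot` data generator, the six (weight, length) pairs, the layer sizes, and the learning rate are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N_JOINTS, PB_DIM, HIDDEN, SAMPLES = 4, 2, 32, 50
# Hypothetical tool states: (weight [kg], length [m]), three weights x two lengths.
TOOLS = [(w, l) for l in (0.15, 0.30) for w in (0.05, 0.10, 0.20)]


def toy_robot(theta, weight, length):
    """Hypothetical stand-in for data collection; returns [COG (2), tool tip (3), screen (2)]."""
    s = np.sin(theta).sum(axis=1, keepdims=True)
    c = np.cos(theta).sum(axis=1, keepdims=True)
    sag = 0.5 * weight * length  # crude "deflection" that grows with tool weight and length
    cog = np.hstack([sag * s, sag * c])
    tip = np.hstack([length * c, length * s, 0.1 * s - sag])
    screen = tip[:, :2] / (1.0 + tip[:, 2:3] ** 2)
    return np.hstack([cog, tip, screen])


theta = rng.uniform(-0.5, 0.5, size=(len(TOOLS) * SAMPLES, N_JOINTS))
tool_idx = np.repeat(np.arange(len(TOOLS)), SAMPLES)  # which tool each sample was collected with
target = np.vstack([toy_robot(theta[tool_idx == k], *TOOLS[k]) for k in range(len(TOOLS))])


def forward(params, theta, pb):
    """Network input is the joint angles concatenated with the PB of the current tool."""
    W1, b1, W2, b2 = params
    x = np.hstack([theta, pb])
    h = np.tanh(x @ W1 + b1)
    return x, h, h @ W2 + b2


def train(theta, tool_idx, target, epochs=2000, lr=0.1):
    n_tools = tool_idx.max() + 1
    params = [rng.normal(0, 0.5, (N_JOINTS + PB_DIM, HIDDEN)), np.zeros(HIDDEN),
              rng.normal(0, 0.1, (HIDDEN, target.shape[1])), np.zeros(target.shape[1])]
    pb = np.zeros((n_tools, PB_DIM))  # one trainable PB vector per tool state
    losses = []
    for _ in range(epochs):
        x, h, y = forward(params, theta, pb[tool_idx])
        err = y - target
        losses.append(np.mean(err ** 2))
        W1, b1, W2, b2 = params
        dy = 2 * err / err.size              # gradient of the mean squared error
        dz = (dy @ W2.T) * (1 - h ** 2)      # backprop through tanh
        dx = dz @ W1.T                       # gradient w.r.t. the network input
        grads = [x.T @ dz, dz.sum(0), h.T @ dy, dy.sum(0)]
        d_pb = np.zeros_like(pb)
        np.add.at(d_pb, tool_idx, dx[:, N_JOINTS:])  # accumulate PB gradients per tool
        params = [p - lr * g for p, g in zip(params, grads)]
        pb -= lr * d_pb
    return params, pb, losses


params, pb, losses = train(theta, tool_idx, target)
print(f"MSE {losses[0]:.4f} -> {losses[-1]:.4f}")
print("learned PB per tool state:\n", pb.round(3))
```

Because every sample of a given tool shares one PB vector, the PB absorbs whatever distinguishes that tool's data from the others. In practice an autodiff framework would replace the hand-written gradients.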

System Overview

System overview of the Whole-body Tool-use Network with Parametric Bias (WTNPB), comprising the Data Collector, Network Trainer, Online Updater, and Tool-Tip Controller for low-rigidity robots.

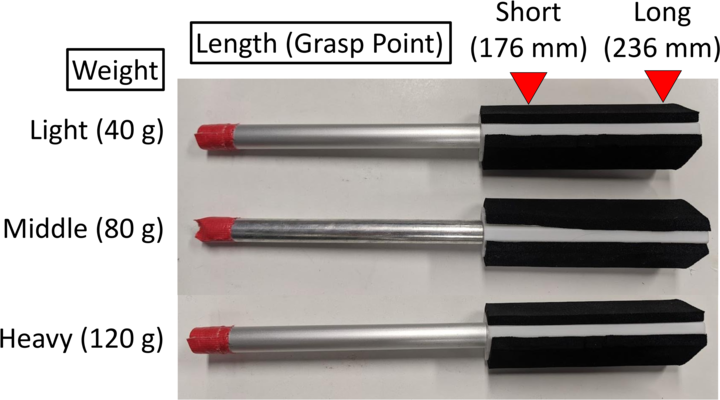

Experimental Setup

Six types of tool states with various weights and lengths used in this study.

The change in tool-tip position and center of gravity when handling tools with different weights.

Simulation Experiments

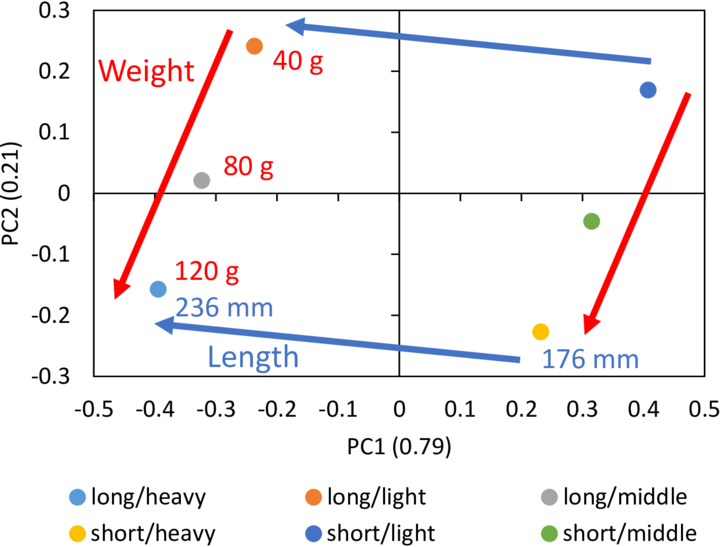

The trained parametric bias in the simulation experiment. The PB values of the tool states align neatly along the axes of tool weight and length.

The trajectory of the parametric bias when PB is updated online while random joint angles are sent to the robot. The current PB values gradually approach the trained PB values for Long/Light or Short/Heavy.
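The online update can be sketched as gradient descent on the PB alone, with the network weights frozen, over a sliding window of recent (joint angles, measurement) pairs. In this minimal sketch the trained network is replaced by a hypothetical closed-form stand-in `model`, gradients are taken by central finite differences, and the buffer size, learning rate, PB values, and noise level are assumptions rather than values from the paper.

```python
import numpy as np
from collections import deque

rng = np.random.default_rng(1)


def model(theta, pb):
    """Hypothetical stand-in for a trained WTNPB with frozen weights.
    Returns [COG (2), tool tip (3)]; pb[0] and pb[1] loosely act like tool weight and length."""
    s = np.sin(theta).sum(axis=-1)
    c = np.cos(theta).sum(axis=-1)
    cog = np.stack([pb[0] * s, pb[0] * c], axis=-1)
    tip = np.stack([pb[1] * c, pb[1] * s, 0.1 * s - pb[0] * pb[1]], axis=-1)
    return np.concatenate([cog, tip], axis=-1)


def pb_loss(pb, thetas, measured):
    """Mean squared prediction error over the buffered samples."""
    return np.mean((model(thetas, pb) - measured) ** 2)


def update_pb(pb, thetas, measured, lr=0.05, eps=1e-4):
    """One gradient step on the PB only (central finite differences; weights stay fixed)."""
    grad = np.zeros_like(pb)
    for i in range(pb.size):
        step = np.zeros_like(pb)
        step[i] = eps
        grad[i] = (pb_loss(pb + step, thetas, measured)
                   - pb_loss(pb - step, thetas, measured)) / (2 * eps)
    return pb - lr * grad


true_pb = np.array([0.2, 1.0])  # hypothetical PB of the grasped tool, unknown to the updater
pb = np.array([1.0, 0.5])       # current PB, initialized to a different tool state
buffer = deque(maxlen=20)       # sliding window of recent (joint angles, measurement) pairs
trajectory = [pb.copy()]
for _ in range(200):
    theta = rng.uniform(-0.5, 0.5, size=4)                      # random joint-angle command
    measured = model(theta, true_pb) + rng.normal(0, 0.005, 5)  # noisy sensor reading
    buffer.append((theta, measured))
    thetas, meas = map(np.array, zip(*buffer))
    pb = update_pb(pb, thetas, meas)
    trajectory.append(pb.copy())

print("final PB:", pb.round(3), "target:", true_pb)
```

Plotting `trajectory` would give a curve of the kind described in the caption: the current PB drifting from its initial value toward the PB that best explains the incoming data.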

We examined how PB values transition in three scenarios of data availability.

Finally, we conducted control experiments incorporating the online update of PB. We initialized the current PB to Long/Light and then sequentially changed the actual tool state to Long/Heavy and Short/Heavy. PB transitioned from Long/Light to Long/Heavy and then to Short/Heavy, as intended. After the first transition, to Long/Heavy, updating PB slightly reduced the control error and significantly reduced the COG error. After the subsequent transition to Short/Heavy, the control error changed significantly, whereas the COG error showed no significant variation.
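One way to use a learned forward model for tool-tip control is to optimize the joint angles through the model. The sketch below assumes that approach: gradient descent on the joint angles, minimizing the squared tool-tip error plus a weighted squared horizontal COG offset (keeping the COG over the feet). The planar three-link `predict` function is a hypothetical stand-in for the trained WTNPB, and all link lengths, masses, PB values, and weights are assumptions; it only illustrates how the current PB changes the solution.

```python
import numpy as np

LINKS = np.array([0.10, 0.10, 0.08])   # hypothetical link lengths [m]
LINK_MASS = np.array([0.3, 0.2, 0.1])  # hypothetical link masses [kg]


def predict(theta, pb):
    """Hypothetical stand-in for a trained WTNPB: planar rigid chain rooted at the feet.
    pb = (tool weight [kg], tool length [m]) here purely for illustration.
    Returns the tool-tip position (x forward, y up) and the horizontal COG position."""
    angles = np.cumsum(theta)
    dirs = np.stack([np.cos(angles), np.sin(angles)], axis=1)  # unit vector of each link
    ends = np.cumsum(LINKS[:, None] * dirs, axis=0)            # end point of each link
    mids = ends - 0.5 * LINKS[:, None] * dirs                  # midpoint of each link
    tip = ends[-1] + pb[1] * dirs[-1]                          # tool extends the last link
    tool_mid = ends[-1] + 0.5 * pb[1] * dirs[-1]
    cog_x = (LINK_MASS @ mids[:, 0] + pb[0] * tool_mid[0]) / (LINK_MASS.sum() + pb[0])
    return tip, cog_x


def control(pb, target, theta0, w_cog=0.1, lr=0.5, steps=2000, eps=1e-6):
    """Gradient descent on joint angles; returns (angles, initial cost, final cost)."""
    def cost(th):
        tip, cog_x = predict(th, pb)
        return np.sum((tip - target) ** 2) + w_cog * cog_x ** 2

    theta = theta0.astype(float)
    initial = cost(theta)
    for _ in range(steps):
        grad = np.array([(cost(theta + e) - cost(theta - e)) / (2 * eps)
                         for e in np.eye(theta.size) * eps])
        theta -= lr * grad
    return theta, initial, cost(theta)


target = np.array([0.20, 0.30])           # desired tool-tip position [m]
theta0 = np.array([np.pi / 2, 0.0, 0.0])  # start upright
results = {}
for name, pb in {"Long/Light": np.array([0.05, 0.20]),
                 "Short/Heavy": np.array([0.20, 0.10])}.items():
    theta, c0, c1 = control(pb, target, theta0)
    tip, cog_x = predict(theta, pb)
    results[name] = (c0, c1)
    print(f"{name}: cost {c0:.4f} -> {c1:.4f}, tip {tip.round(3)}, COG x {cog_x:.3f}")
```

The weight `w_cog` trades tool-tip accuracy against COG placement; a wrong PB shifts both predictions, which is why updating PB online can affect the control error and the COG error differently.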

Actual Robot Experiments

PB was updated online while the tool state was set to Long/Light or Short/Heavy and random joint angles were sent to the robot. The current PB gradually approaches the trained PB of Long/Light or Short/Heavy.

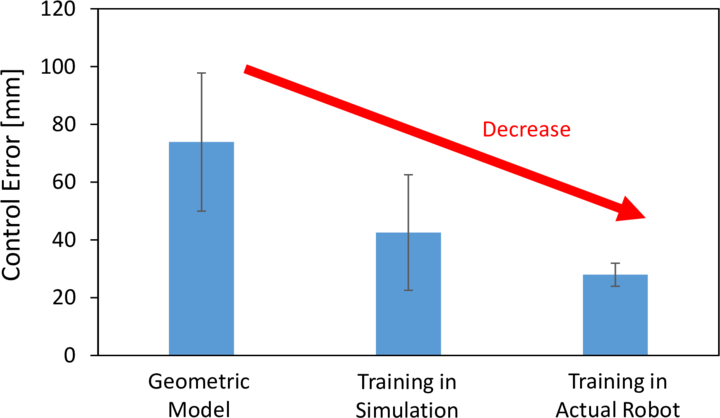

We compared control errors for the Long/Middle tool state when solving whole-body inverse kinematics with three models: a geometric model, WTNPB trained with simulation data including joint deflection, and WTNPB fine-tuned with actual robot data. The geometric model exhibits the largest error, followed by WTNPB trained on simulation data with joint deflection; fine-tuning on the actual robot achieves the smallest control error.

Integrated Experiment

We conducted an integrated experiment that combined online update and tool-tip position control on the actual robot. The task was to use the tool to open a window located at a high position. We initialized PB to the Short/Heavy state, and the robot grasped the Long/Light tool. While the robot performed random movements, the current PB was updated online; the robot then positioned itself in front of the window by walking sideways. The 3D position of the window was recognized from an AR marker attached to it. Subsequently, the robot moved the tool tip to a point 60 mm to the left of the window and then moved it 80 mm to the right to open the window. The entire sequence succeeded, demonstrating that a series of tool manipulation tasks is feasible on a low-rigidity robot.
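The window-opening motion reduces to two tool-tip targets defined relative to the marker position. A small sketch, assuming a robot frame with x forward, y left, and z up (the ROS REP 103 convention), which the source does not specify; the helper name and the marker coordinates are hypothetical.

```python
import numpy as np

# Assumption: robot frame with x forward, y left, z up (ROS REP 103 convention).
LEFT = np.array([0.0, 1.0, 0.0])


def window_opening_waypoints(window_pos, left_offset=0.060, slide=0.080):
    """Hypothetical helper: tool-tip targets [m] for the window-opening motion."""
    start = window_pos + left_offset * LEFT  # 60 mm to the left of the window
    end = start - slide * LEFT               # then 80 mm to the right
    return start, end


window = np.array([0.25, 0.00, 0.40])  # hypothetical AR-marker position [m]
start, end = window_opening_waypoints(window)
print(start, end)  # end lies 20 mm to the right of the marker position
```

Each waypoint would then be passed to the tool-tip controller as a target position.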

Bibtex

@inproceedings{kawaharazuka2024kxr,
  author={K. Kawaharazuka and K. Okada and M. Inaba},
  title={{Adaptive Whole-body Robotic Tool-use Learning on Low-rigidity Plastic-made Humanoids Using Vision and Tactile Sensors}},
  booktitle={2024 IEEE International Conference on Robotics and Automation},
  year=2024,
}

Contact

If you have any questions, please feel free to contact Kento Kawaharazuka.